Image by awcreativeut

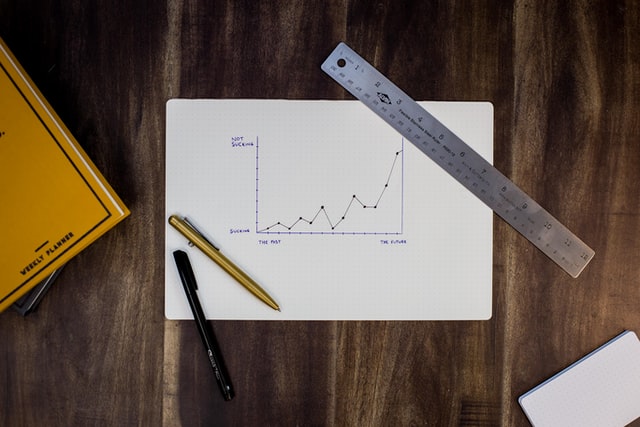

A blog post is in progress.

Merriam-Webster defines a channel as, among other meanings:

the bed where a natural stream of water runs

I will start this post by using the water channel analogy to explore how it relates to System.Threading.Channels in C#.